If you love gardening, you know how demanding

A straightforward gardening task, let’s say bush trimming, involves three basic steps. First, the gardener detects the bush, then s/he approaches it, and

When traditional formulas fail

Nowadays, machine learning is at the core of

Convolutional Neural Networks (

Throwing light upon the garden

Recall the task of bush trimming. The first step is to detect the bush. However, the imaging conditions in a garden may vary significantly, even over short periods of time, which changes the appearance of garden objects and scenes. The light reaching a garden scene changes constantly with the weather and with the position of the sun and clouds. As a result, images of garden objects may contain shadows, specular highlights, and illumination changes. These changes affect the pixel (RGB) values of images, so the appearance of a bush, for example, fluctuates during the day: sometimes it is brighter due to direct sunlight, at other times it appears dimmer due to shadows. People cope with those changes easily, but computers just see numbers (RGB values) without any context. So, for a CNN to recognize a bush, it needs to examine a large number of garden images that include lighting variation. Instead of analyzing countless images, a fully illumination-invariant representation can help a CNN ignore those illumination effects.

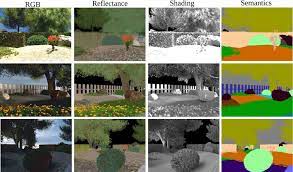

To achieve this, we need to break down the images into smaller parts such as reflectance (albedo) and shading (illumination). This method is called intrinsic image decomposition and it is based on the assumption that scenes are basically composed of object shapes and their interactions with the light and their material

An image is decomposed such that pixel-wise multiplication of albedo and shading components gives the original image back. Therefore, manipulating only the colors or only the light effects of a scene becomes possible. Furthermore, it allows us to recreate new images by individually manipulating these color and light effects, which is a useful feature for photo-realistic editing tasks, for instance. In the Computer Vision Lab, we study means to create real-time algorithms that are not affected by outdoor illumination [3].
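The pixel-wise relation described above (image = albedo × shading) can be illustrated with a minimal NumPy sketch. The tiny 2×2 arrays, the `compose` helper, and the two shading maps are made up for illustration. In practice the shading is unknown and has to be estimated, which is the hard part that the decomposition algorithms address.

```python
import numpy as np

def compose(albedo, shading):
    """Recombine the intrinsic components: image = albedo * shading, pixel-wise."""
    return albedo * shading

# Hypothetical 2x2 RGB albedo (material colour), values in [0, 1].
albedo = np.array([[[0.2, 0.6, 0.2], [0.2, 0.6, 0.2]],
                   [[0.5, 0.4, 0.3], [0.5, 0.4, 0.3]]])

# Two hypothetical grey-scale shading maps: direct sunlight and partial shadow.
# The trailing axis lets one shading value scale all three colour channels.
sunny = np.array([[1.0, 1.0], [1.0, 1.0]])[..., None]
shadowed = np.array([[1.0, 0.5], [0.5, 0.25]])[..., None]

image_sunny = compose(albedo, sunny)
image_shadowed = compose(albedo, shadowed)

# The RGB values of the two images differ ...
pixels_differ = not np.allclose(image_sunny, image_shadowed)

# ... but dividing out the shading (assumed known here) recovers the same albedo.
recovered = image_shadowed / shadowed
albedo_matches = np.allclose(recovered, albedo)
```

Both images share one albedo, which is why a representation built on albedo does not change when the light does.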

How to pilot a robot

The next task for TrimBot2020 is to detect and reach the bushes without hitting any obstacles. Remember that robots only observe numeric pixel (RGB) values. For a robot to detect its surroundings, we use semantic segmentation, a process that involves grouping and labeling all pixels of an image.
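As a rough illustration of what "labeling all pixels" means, the sketch below turns per-pixel class scores into a label map by picking the highest-scoring class at each pixel. The class names and the score values are assumptions made up for this example. In a real system the scores would come from a trained network.

```python
import numpy as np

# Hypothetical label set; the classes used in the project may differ.
CLASSES = ["grass", "bush", "obstacle"]

def segment(scores):
    """Turn per-pixel class scores of shape (H, W, C) into a label map (H, W)."""
    return np.argmax(scores, axis=-1)

# Made-up scores for a 2x3 image, standing in for the output of a network.
scores = np.array([
    [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.1, 0.8, 0.1]],
    [[0.6, 0.3, 0.1], [0.1, 0.2, 0.7], [0.3, 0.6, 0.1]],
])

label_map = segment(scores)  # integer class id per pixel
label_names = [[CLASSES[i] for i in row] for row in label_map]
```

Pixels that share a label form the groups (segments) that the robot can reason about.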

Because changes in illumination alter the pixel values of an image, they may have a negative influence on the segmentation. For example, in Figure 2, the appearance of the bushes in the first and last rows is quite different because of the different illumination effects. But the albedo image is invariant to illumination, so we can use it for segmentation tasks. Moreover, semantic segmentation may be beneficial for albedo prediction. Each label constrains the

To achieve that, we design a CNN architecture that jointly learns intrinsic image decomposition (Fig. 3) and semantic segmentation (Fig. 4) using the synthetic garden dataset. The results show mutual benefits when the two tasks are performed jointly for natural scenes. In this way, TrimBot2020 is able to make decisions based on pixel labels. For example, if there is an obstacle on the path, such as a rock or a hole, it can avoid it, or it can detect and approach a bush to trim it [4].
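To make "decisions based on pixel labels" concrete, here is a toy sketch of how a label map could be turned into a high-level action. It is not the controller used by TrimBot2020. The label ids, the `choose_action` function, the action names, and the idea of checking a band of image columns as the "path" are all assumptions for illustration.

```python
import numpy as np

GRASS, BUSH, OBSTACLE = 0, 1, 2  # hypothetical label ids

def choose_action(label_map, path_columns):
    """Pick a high-level action from a segmentation label map.

    label_map: (H, W) integer array of per-pixel labels.
    path_columns: slice of image columns covering the planned path.
    """
    path = label_map[:, path_columns]
    if np.any(path == OBSTACLE):
        return "avoid"            # something blocks the planned path
    if np.any(label_map == BUSH):
        return "approach_bush"    # a bush is visible and the path is free
    return "keep_exploring"

# Two made-up 2x3 label maps; the path covers the first two columns.
clear_view = np.array([[GRASS, GRASS, BUSH],
                       [GRASS, GRASS, BUSH]])
blocked_view = np.array([[GRASS, OBSTACLE, BUSH],
                         [GRASS, GRASS, BUSH]])
path = slice(0, 2)

action_clear = choose_action(clear_view, path)
action_blocked = choose_action(blocked_view, path)
```

The obstacle check comes first so that a visible bush never overrides a blocked path.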

Robotics technology is expected to be dominant in the coming decade. According to a report from the International Federation of Robotics, the number of household domestic robots will hit 31 million by the end of 2019. Floor cleaning robots, lawn mowers and edutainment robots ("edutainment" is a word introduced in the nineties to describe education and entertainment tasks) currently have the biggest market share, but the next generation of robots will very likely have greater capabilities, especially in interacting with humans and the environment.

Robotic kitchen assistants, home-butlers, pool cleaners, laundry folders, and many other autonomous intelligent robots to help us with our daily chores are around the corner. As for non-domestic fields, autonomous security robots and self-driving vehicles for local goods transportation have already been deployed in the U.S. Additionally, agricultural robotic systems are already widely used in the Netherlands and all around the world for horticulture, weed control, harvesting, and fresh supply chains. As for the TrimBot2020, the motion and manipulation skills and the perception of plants and obstacles under varied weather conditions are clear. At the Computer Vision Lab, the expectation is that the TrimBot project will increase Europe’s market share in domestic service robots and will improve technology readiness levels of robotics technologies.

References:

[1] N. Strisciuglio, R. Tylecek, M. Blaich, N. Petkov, P. Biber, J. Hemming, E. van Henten, T. Sattler, M. Pollefeys, T. Gevers, T. Brox, and R. B. Fisher. Trimbot2020: an outdoor robot for automatic gardening. In International Symposium on Robotics, 2018.

[2] H. G. Barrow and J. M. Tenenbaum. Recovering intrinsic scene characteristics from images. Computer Vision Systems, pages 3–26, 1978.

[4] A. S. Baslamisli, T. T. Groenestege, P. Das, H. A. Le, S. Karaoglu, and T. Gevers. Joint learning of intrinsic images and semantic segmentation. In European Conference on Computer Vision, 2018.

[3] A. S. Baslamisli, H. A. Le, and T. Gevers. CNN based learning using reflection and